How to queue requests in Node.js to gain a huge performance boost

Intro

In this article we will learn a nifty trick, made possible by the way Node.js works, to increase your app's performance.

I've outlined the problem and the solution in one of my previous articles: How to serve 10x more request using asynchronous request batching.

That article provided a solution for a callback-based approach. In this article, we will use the modern async/await approach.

The problem

Often we have one or more high-load endpoints in our applications, where we expect thousands of concurrent requests.

When all the requests arrive at the same time, a caching mechanism does not help: the cache is initially empty, so every request still hits the database or another I/O operation before the first result can be stored.
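
To make the problem concrete, here is a minimal simulation of a burst of requests against a naive cache. It is only a sketch: `simulateBurst`, `slowQuery`, and `getWithCache` are hypothetical names, and the 50 ms timer stands in for a real database or API call.

```javascript
// Simulates `count` requests hitting a naive read-through cache at the same time.
// Resolves to the number of times the slow operation actually ran.
async function simulateBurst(count) {
  let slowCalls = 0;
  const cache = new Map();

  // Hypothetical stand-in for a database query or third-party API call.
  async function slowQuery() {
    slowCalls += 1;
    await new Promise((resolve) => setTimeout(resolve, 50));
    return { value: 42 };
  }

  // Classic cache lookup: return on hit, otherwise fetch and store.
  async function getWithCache(key) {
    if (cache.has(key)) {
      return cache.get(key);
    }
    const result = await slowQuery(); // every concurrent miss lands here
    cache.set(key, result);
    return result;
  }

  // All calls start before the first slowQuery() has resolved.
  await Promise.all(Array.from({ length: count }, () => getWithCache("home")));
  return slowCalls;
}

simulateBurst(100).then((calls) => {
  console.log(`slow operation ran ${calls} times for 100 requests`);
});
```

Every request checks the cache before any of them has stored a result, so in this simulation the slow operation runs once per request and the cache saves nothing.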

Solution

This is exactly the case where a queueing mechanism helps.

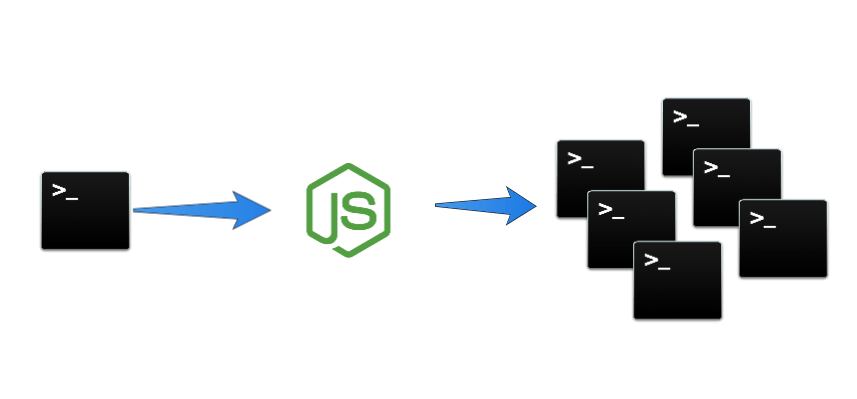

The idea is that, since Node.js runs JavaScript on a single thread, all request and response objects are handled by that same thread.

We can use this to our advantage: we queue all the incoming requests, wait for the first request's operation to finish, and then send its result to every response in the queue.

This way we will have only one asynchronous operation running at a time, but we will be able to serve a massive amount of concurrent requests.
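
The same idea can be sketched without any web framework. The helper below is an illustration under assumptions, not code from the original article: `createQueuedOperation` and `demo` are hypothetical names, and promises stand in for the response objects. It relies on the fact that the check-and-push on the queue is synchronous, so no locking is needed.

```javascript
// Wraps an async `operation` so that concurrent callers share one in-flight run.
function createQueuedOperation(operation) {
  let waiting = []; // callers parked until the current run settles

  // Settle every parked caller, resetting the queue first so that
  // callers arriving afterwards start a fresh run.
  function flush(settle) {
    const batch = waiting;
    waiting = [];
    batch.forEach(settle);
  }

  return function run() {
    return new Promise((resolve, reject) => {
      // This check-and-push is synchronous, so no other caller can
      // interleave with it on Node's single JavaScript thread.
      const isFirst = waiting.length === 0;
      waiting.push({ resolve, reject });
      if (!isFirst) {
        return; // a run is already in flight; just wait for it
      }

      Promise.resolve()
        .then(operation)
        .then(
          (value) => flush((caller) => caller.resolve(value)),
          (error) => flush((caller) => caller.reject(error))
        );
    });
  };
}

// Demo: 1000 concurrent callers, one actual run of the slow operation.
async function demo() {
  let runs = 0;
  const slow = async () => {
    runs += 1;
    await new Promise((resolve) => setTimeout(resolve, 50));
    return "payload";
  };
  const queued = createQueuedOperation(slow);
  const results = await Promise.all(Array.from({ length: 1000 }, () => queued()));
  return { runs, served: results.length };
}

demo().then(({ runs, served }) => {
  console.log(`served ${served} callers with ${runs} run(s)`);
});
```

Resetting the queue before settling the callers is a deliberate choice in this sketch: anything that arrives afterwards starts a new run instead of joining a batch that has already been answered.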

Case study

Let's review the following Node.js (Express) application:

    const express = require("express");
    const axios = require("axios");

    const app = express();

    // we have the home page which will return the headers
    // of the request by accessing a third party API
    app.get("/", async (req, res) => {
      try {
        const headers = await getHeaders();
        res.json({ headers });
      } catch (e) {
        res.status(500).json({ error: e.message });
      }
    });

    app.listen(3000, () => {
      console.log("Server is running http://localhost:3000/");
    });

    async function getHeaders() {
      const response = await axios({
        method: "get",
        url: "http://headers.jsontest.com/",
      });
      return response.data;
    }

If we run the application and make a couple of requests, we will see that the response time is around 200ms.

Now let's stress test this app using ApacheBench (ab). We will run it with 1000 concurrent requests for 10 seconds.

    ab -c 1000 -t 10 http://localhost:3000/

    Finished 2450 requests

    Concurrency Level:      1000
    Time taken for tests:   10.001 seconds
    Complete requests:      2450
    Failed requests:        1346
       (Connect: 0, Receive: 0, Length: 1346, Exceptions: 0)
    Total transferred:      1165174 bytes
    HTML transferred:       652915 bytes
    Requests per second:    244.97 [#/sec] (mean)
    Time per request:       4082.109 [ms] (mean)
    Time per request:       4.082 [ms] (mean, across all concurrent requests)
    Transfer rate:          113.77 [Kbytes/sec] received

We can see that the server handles around 245 requests per second.

Queueing requests

Let's try to improve this by using a queueing mechanism.

    const express = require("express");
    const axios = require("axios");

    const app = express();

    // response objects waiting for the in-flight operation to finish
    let queue = [];

    app.get("/", async (req, res) => {
      // if our queue is empty, this is the first request in the batch
      if (queue.length === 0) {
        // we add our response object to the queue
        queue.push(res);
        try {
          // do our asynchronous operation, which takes time
          const headers = await getHeaders();
          // once the operation is done, send the result to every response in the queue
          for (const queuedRes of queue) {
            queuedRes.json({ headers });
          }
          // it is very important to clean up the queue after we are done
          queue = [];
        } catch (e) {
          // we do a similar thing if we have an error
          for (const queuedRes of queue) {
            queuedRes.status(500).json({ error: e.message });
          }
          // clean the queue
          queue = [];
        }
      } else {
        // the asynchronous operation has already started, so we only add the response to the queue
        queue.push(res);
        // while the operation is running we queue all the responses;
        // this lets us serve a massive amount of concurrent requests
        // with only one asynchronous operation running at a time
      }
    });

    app.listen(3000, () => {
      console.log("Server is running http://localhost:3000/");
    });

    async function getHeaders() {
      const response = await axios({
        method: "get",
        url: "http://headers.jsontest.com/",
      });
      return response.data;
    }

Let's run the same stress test again:

    ab -c 1000 -t 10 http://localhost:3000/

    Finished 21239 requests

    Concurrency Level:      1000
    Time taken for tests:   10.107 seconds
    Complete requests:      21239
    Failed requests:        11430
       (Connect: 0, Receive: 0, Length: 11430, Exceptions: 0)
    Total transferred:      10534090 bytes
    HTML transferred:       5902023 bytes
    Requests per second:    2101.49 [#/sec] (mean)
    Time per request:       475.854 [ms] (mean)
    Time per request:       0.476 [ms] (mean, across all concurrent requests)
    Transfer rate:          1017.86 [Kbytes/sec] received

We can see that the server now handles around 2101 requests per second, which is roughly 8.6x more than the previous result (close to the 10x mark).

We can also see that the mean time per request, across all concurrent requests, dropped from about 4.1ms to about 0.5ms, which is roughly 8.6x faster than the previous result.

Conclusion

We can see that queueing requests/responses can drastically improve the performance of our application. The technique can be summarized in the following pseudocode:

    create queue for each endpoint to be optimized
    // if your endpoint has one or multiple arguments
    // then you can create a map of queues where the key is the arguments

    in each endpoint handler
        if queue is empty
            add response to queue
            do asynchronous operation
            send response to all responses in the queue
            clean up the queue
        else
            add response to queue
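
The "map of queues" variant mentioned in the pseudocode comment could look roughly like the sketch below. It is an assumption-laden illustration rather than code from the original article: `createKeyedQueueHandler` and `loadByKey` are hypothetical names, and `res` only needs Express-style `status()` and `json()` methods.

```javascript
// Builds a handler that keeps one queue of waiting responses per key.
// `loadByKey` is a hypothetical async function, e.g. a database lookup by id.
function createKeyedQueueHandler(loadByKey) {
  const queues = new Map(); // key -> array of response objects

  return async function handle(key, res) {
    const existing = queues.get(key);
    if (existing) {
      // an operation for this key is already running: just wait for it
      existing.push(res);
      return;
    }

    // first request for this key: open a queue and start the operation
    queues.set(key, [res]);
    let send;
    try {
      const data = await loadByKey(key);
      send = (queuedRes) => queuedRes.json({ data });
    } catch (e) {
      send = (queuedRes) => queuedRes.status(500).json({ error: e.message });
    }

    // remove the queue before replying so later requests start a new batch
    const batch = queues.get(key);
    queues.delete(key);
    batch.forEach(send);
  };
}
```

In an Express app this might be wired up as `app.get("/users/:id", (req, res) => handle(req.params.id, res))`, where the route and the choice of `req.params.id` as the key are only examples. Requests for different keys run their operations independently, while requests for the same key share one result.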